As businesses grow increasingly curious about integrating AI-powered agents into their operations, ensuring these agents are reliable, accurate, and efficient has never been more critical. The latest Agentforce Testing Center release from Salesforce allows organizations to test and improve their AI agents in a secure setting before they are deployed into live environments. Lane Four has been able to experience firsthand how effective this testing tool is prior to launching our initial client Agentforce implementations.

With features such as synthetic data testing, secure sandbox environments, and analytics, the Agentforce Testing Center is intended to provide a framework for organizations to build AI-driven automation with confidence that their agents will be more helpful than harmful to their operations.

The Challenge of AI Reliability

One of the biggest hurdles to widespread AI adoption is the unpredictability of AI agents. From generating misleading or incorrect outputs (often called “hallucinations”) to causing operational disruptions—such as misclassifying data or failing to retrieve the right knowledge articles—many organizations hesitate to fully embrace AI due to concerns about trust and consistency.

We’re also still riding the Agentforce wave and learning as we go, but one thing is clear—traditional testing methods simply can’t keep up with these challenges. To ensure AI agents perform reliably in production, they need to be validated in sandbox environments that accurately simulate live interactions. At the same time, businesses need clear visibility into performance, compliance, and security risks.

Designed to eliminate uncertainty, the Agentforce Testing Center provides a structured, closed-loop testing framework within the Salesforce ecosystem, allowing businesses to refine their AI agents and gain more confidence before deploying them into production.

What’s so special about the Agentforce Testing Center?

Salesforce’s Agentforce Testing Center introduces a new standard for AI lifecycle management by incorporating:

- Data & Interaction Testing: Simulated test cases allow organizations to rigorously evaluate AI agents and real-life customer interactions without impacting live operations

- Secure Sandbox Environments: Salesforce offers Data Cloud Sandboxes as well, allowing AI models to be refined and tested in isolated environments, reducing risk before full-scale deployment

- Advanced Analytics & Audit Trails: Detailed reporting and monitoring tools like Agentforce Analytics and Utterance Analysis ensure transparency, allowing teams to make data-driven optimizations based on granular metrics

- The Einstein Trust Layer: A built-in safeguard that enhances AI reliability by providing robust feedback mechanisms and compliance tracking

How It Works: Setting Up AI Testing in Agentforce Testing Center

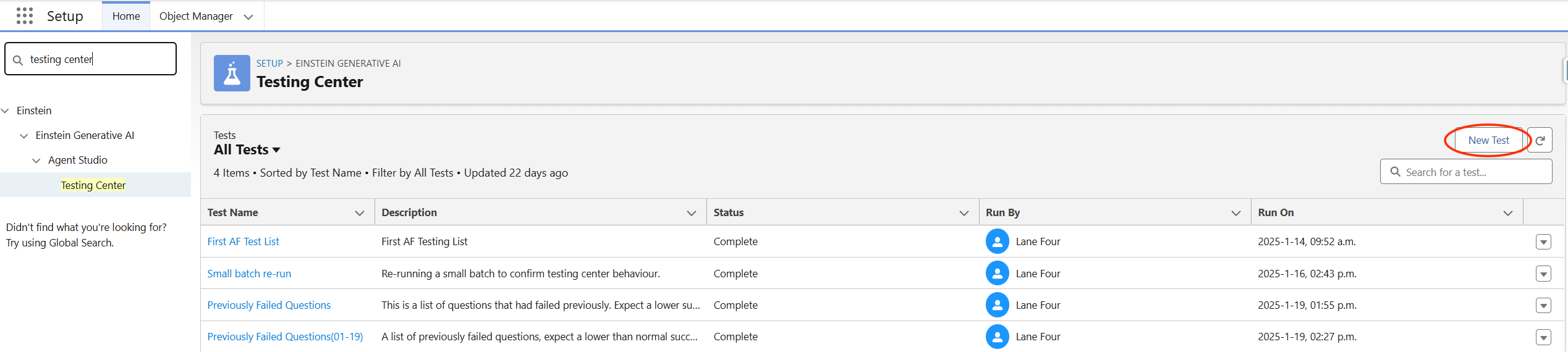

Getting started with the Agentforce Testing Center is straightforward. Within the Agent Studio setup, users can create and execute test scenarios using structured templates. The process involves four steps:

1. Create a New Test: Access the Testing Center through Agent Studio. Define a test name and assign it to a specific AI agent. If several agents have been created, they will all be listed here.

Source: Lane Four

2. Upload a Testing Template CSV: This structured spreadsheet file can be downloaded from the “New Test” pop-up (though we recommend having this downloaded and shared with your team as well), and it outlines test parameters across four key categories:

- Utterance: The input prompt to be tested

- Expected Topic: The intended workflow path

  - Be sure to use the API name, not the topic name

- Expected Actions: The AI functions the agent should trigger

  - While it may seem intuitive that an AI agent triggering the right topic would also take the correct actions, the Testing Center requires explicitly defined expected actions for validation. Examples include “Use Knowledge,” “Create Case,” or “Escalate Issue”

- Expected Response: A descriptive summary of the anticipated output

Source: Lane Four

3. Execute Tests & Analyze Results: Once the test runs, outcomes are evaluated based on pass/fail criteria, with response accuracy verified by an AI-driven assessment rather than strict text matching. More below on this.

4. Iterative Refinement: Organizations can fine-tune AI models by analyzing failure cases and adjusting prompts, workflows, or agent logic accordingly.

Tests run quickly and at scale, giving organizations an efficient way to refine AI reliability before deployment. While speed may vary based on test volume, AI testing remains significantly faster than traditional methods—measured in seconds rather than minutes or hours!
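To make the template step more concrete, here is a minimal Python sketch of how a team might assemble and sanity-check test cases before uploading them. The column headers simply mirror the four categories described above; the exact headers in the downloadable template may differ, so treat them as an assumption. The topic and action values are made-up examples, and the "no spaces" check is only a rough heuristic for spotting a topic label where an API name is expected.

```python
import csv
import io

# Assumed headers: these mirror the four categories described in this post.
# Check the real downloaded template for the exact column names.
COLUMNS = ["Utterance", "Expected Topic", "Expected Actions", "Expected Response"]

# Hypothetical test cases; the topic and action names are illustrative only.
TEST_CASES = [
    {
        "Utterance": "How do I reset my password?",
        "Expected Topic": "Account_Support",
        "Expected Actions": "Use_Knowledge",
        "Expected Response": "Explains the password reset steps from the knowledge base.",
    },
    {
        "Utterance": "I want to talk to a person",
        "Expected Topic": "Escalation",
        "Expected Actions": "Escalate_Issue",
        "Expected Response": "Acknowledges the request and hands off to a human agent.",
    },
]


def validate(case):
    """Return a list of problems found in a single test case."""
    problems = []
    for column in COLUMNS:
        if not case.get(column, "").strip():
            problems.append(f"missing {column}")
    # Heuristic: a value with spaces is probably a display label, not an API name.
    if " " in case.get("Expected Topic", "").strip():
        problems.append("Expected Topic looks like a label, not an API name")
    return problems


def build_csv(cases):
    """Validate every case, then return the CSV content as a string."""
    for row_number, case in enumerate(cases, start=1):
        problems = validate(case)
        if problems:
            raise ValueError(f"row {row_number}: {', '.join(problems)}")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(cases)
    return buffer.getvalue()


csv_text = build_csv(TEST_CASES)
print(csv_text)
```

Keeping test cases in a script or shared repository like this makes it easier for a team to review and extend them together before each upload.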

Interpreting Test Results: What Pass/Fail Really Means

AI models inherently introduce a degree of variability—particularly for conversational AI. Unlike deterministic software tests, where a 100% pass rate is often expected, AI testing must account for nuanced response variability while ensuring functional integrity.

- Canned Responses (Static Outputs): These should achieve near-100% accuracy since they follow predefined templates

- Conversational AI Responses: Some degree of variation is expected, so a 95-98% pass rate may still be acceptable, even though the test run will show failures

- Knowledge-Based Queries: Responses should be contextually accurate, even if phrasing varies slightly

Since pass or fail results are highly contextual, each organization needs to define what they truly mean and how to use test results. While red and green indicators can feel definitive, they don’t tell the whole story (thankfully, this isn’t Squid Game). Instead of expecting absolute consistency, businesses should have these important internal conversations and establish meaningful pass/fail thresholds that align with their needs, rather than assuming anything below 100% means the AI agent is unreliable or can’t be used. From there, expect to iterate on your agents as needed.

Source: Lane Four
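One way to put the threshold idea into practice is to tag each test case with a response category and compare pass rates against targets your team has agreed on. The sketch below is our own illustration, not a Testing Center feature: the category names, the threshold values, and the sample results are all hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical thresholds; every organization should set its own.
THRESHOLDS = {
    "canned": 0.99,          # static outputs should pass almost always
    "conversational": 0.95,  # some variation is expected
    "knowledge": 0.90,       # placeholder value, not from Salesforce
}


def pass_rates(results):
    """Compute the pass rate per category from (category, passed) pairs."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for category, passed in results:
        totals[category] += 1
        if passed:
            passes[category] += 1
    return {category: passes[category] / totals[category] for category in totals}


def evaluate(results, thresholds):
    """Report each category's pass rate and whether it meets its threshold."""
    return {
        category: {"rate": rate, "meets_threshold": rate >= thresholds[category]}
        for category, rate in pass_rates(results).items()
    }


# Made-up results standing in for an exported test run.
sample_results = (
    [("canned", True)] * 20
    + [("conversational", True)] * 19
    + [("conversational", False)]
    + [("knowledge", True)] * 8
    + [("knowledge", False)] * 2
)

report = evaluate(sample_results, THRESHOLDS)
for category, outcome in report.items():
    status = "OK" if outcome["meets_threshold"] else "needs review"
    print(f"{category}: {outcome['rate']:.0%} ({status})")
```

In this made-up run, the conversational category contains a failed test but still meets its agreed threshold, while the knowledge category falls short and is flagged for another round of refinement.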

Strengths, Limitations, and Our Candid Take So Far

From our initial experience, the Agentforce Testing Center is highly effective at what it’s designed to do—ensuring AI agents deliver accurate, reliable initial responses. Accuracy isn’t a major concern at this stage, and for validating how an AI agent handles its first interaction, the tool has proven invaluable based on our early discoveries. After all, this is where AI is most likely to go off track.

However, one key limitation is that the Testing Center currently doesn’t support multi-step interactions. While it excels at verifying initial responses, it doesn’t yet assess how an AI agent navigates a complex conversation with multiple back-and-forth exchanges. This will be an important area for future enhancements, especially as businesses look to deploy AI in more advanced, real-world scenarios.

Another factor to consider is that solidifying data categories on Knowledge Base items is essentially a prerequisite for using the Testing Center effectively. This could pose an extra setup challenge for some teams, but at Lane Four, we’ve developed an approach that uses AI to streamline this process. It’s just one of the ways we’re helping businesses optimize their Agentforce implementation for long-term success.

Some Final Thoughts

So, why does this new way of testing matter to businesses again? The launch of the Agentforce Testing Center signals Salesforce’s commitment to making AI adoption scalable, reliable, and cost-effective. For organizations leveraging Agentforce for customer interactions, support automation, or knowledge management, this tool offers a structured way to validate AI before it reaches customers, exactly as the name suggests.

By adopting a rigorous testing framework, businesses can:

- Reduce AI-related risks before deployment

- Optimize AI agent performance through iterative refinement

- Ensure compliance & governance using structured audit trails

While the Agentforce Testing Center marks a significant advancement in AI Lifecycle Management, we believe ongoing iterations will be key to enhancing multi-cloud compatibility, expanding testing capabilities, and integrating deeper AI trust mechanisms. As AI adoption accelerates, businesses must remain agile in their approach to AI validation and refinement.

But for now, we’re not going to let that stop us at Lane Four and will continue actively testing and implementing Agentforce capabilities to help our clients maximize the possibilities of how AI can help them work smarter. Interested in exploring how AI agents can elevate your business or want to learn more about how we’ve made the AI testing process even smoother for our clients? Let’s chat!